U.S. News & World Report published its first rankings of U.S. colleges in 1983. In that issue, Oberlin was ranked fifth among the nation’s top liberal arts colleges. Back then, to determine a college’s ranking, U.S. News did a simple thing: it merely asked other college presidents what they thought of their competitors. Considering Oberlin’s long history as one of the world’s best liberal arts colleges, the result was hardly a surprise.

For several years, Oberlin held its place in the rankings. In 1987, it still ranked fifth. But in 1988, Oberlin fell out of the top 10, and in the 2011 rankings it placed 23rd.

How should we interpret this trend in Oberlin’s U.S. News rankings? Is Oberlin no longer as good as it once was? Or did its reputation change among college leaders even while its quality and performance remained relatively unchanged, or even improved?

More importantly, how much weight should we give to the rankings? Should we even consider them at all?

In 1988, U.S. News began using a more objective formula to determine its college rankings. This formula still weights a reputation survey of college leaders (and new this year, high school counselors) most heavily (22.5 percent). But the formula now also considers graduation rates and freshman retention rates (20 percent), faculty resources such as class size and faculty salaries (20 percent), admissions selectivity (15 percent), institutional spending per student (10 percent), and the rate of alumni giving (5 percent). It also tries to measure value added by predicting a graduation rate based on selectivity and the proportion of low-income students, and including the difference between the actual and predicted rates in its formula (7.5 percent).
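
The weighting described above can be pictured as a simple weighted average. The following is only a minimal sketch: the weights are the ones listed in this article, but the indicator names, the 0 to 100 scale for each indicator, and the function names are assumptions made for illustration, not the magazine’s actual method for normalizing or combining its data.

```python
# Weights as listed in the article, in percent of the overall score.
# The dictionary keys are hypothetical labels chosen for this sketch.
WEIGHTS = {
    "reputation_survey": 22.5,
    "graduation_and_retention": 20.0,
    "faculty_resources": 20.0,
    "admissions_selectivity": 15.0,
    "spending_per_student": 10.0,
    "alumni_giving": 5.0,
    "value_added": 7.5,
}


def composite_score(indicators):
    """Return the weighted average of the indicator scores.

    Assumption: every indicator has already been rescaled to 0-100.
    """
    missing = set(WEIGHTS) - set(indicators)
    if missing:
        raise ValueError(f"missing indicators: {sorted(missing)}")
    total_weight = sum(WEIGHTS.values())
    return sum(WEIGHTS[name] * indicators[name] for name in WEIGHTS) / total_weight


def value_added(actual_grad_rate, predicted_grad_rate):
    """Actual minus predicted graduation rate, in percentage points."""
    return actual_grad_rate - predicted_grad_rate
```

Under these assumptions, a college that scored 100 on the reputation survey and zero on everything else would still earn 22.5 points overall, which shows how much of the total rests on opinion alone.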

This formula marked the beginning of Oberlin’s decline in the rankings in the late 1980s. Many of the indicators chosen by U.S. News were unfavorable to Oberlin’s size and strengths. For example, including institutional spending per student is less favorable because Oberlin, at nearly 3,000 students, is roughly twice as large as Swarthmore and Amherst. Including the admissions rate is also less favorable, because although Oberlin receives more applications than Carleton and Grinnell, it has many more places to fill in its incoming class.

Interestingly, many of Oberlin’s indicators have improved significantly over the years. From 1999 to 2006, the acceptance rate fell from 54 percent to 34 percent, and the percentage of students in the top 10 percent of their high school class rose from 54 percent to 68 percent. The SAT score of students in the 25th percentile rose by 70 points. These positive moves were largely offset, however, by similar increases at the other top 25 liberal arts colleges. The increasing size of the U.S. college-going cohort, the increasing competitiveness of top high school students, and the maturation of the national college market have all been significant factors in improving the academic profile of elite colleges.

But the strange part is what happened to Oberlin’s reputation among other college presidents and provosts. Over the course of the 1990s, Oberlin’s reputation score (the average of the 1 to 5 ratings provided by all the survey respondents) fell in each successive year. So while Oberlin significantly improved the academic accomplishments of its incoming students, the college’s reputation among its peers declined. Did college presidents have some insider information about a change in Oberlin’s quality? Were they looking more carefully at the indicators of Oberlin’s performance and judging accordingly? And what about the other liberal arts colleges and research universities: can we see a similar pattern?

A few years ago, I decided to investigate this question with Nicholas Bowman, a postdoctoral researcher at the University of Notre Dame. We wanted to see if we could identify what led to increases or decreases in a college’s reputation score. For example, were people looking at the admissions and financial indicators that U.S. News uses in its formula? Or were other forces at work?

To look at this question, we entered the published rankings data for the top 25 liberal arts colleges and research universities, both in 1989, the first year that all the data was available, and in 2006, then the latest data available. After controlling for the important factors, we came to a startling conclusion. The most important factor in the change of a college’s reputation among peers wasn’t changes in its financial performance, the academic markers of its incoming class, or the quality of its instruction. It was the ranking itself.

This result turns our thinking about rankings on its head. The creators of rankings ask people about reputation because they want to include it as an independent indicator of a college’s quality. They think academic experts know more about peer institutions than can be observed in mere numbers, and thus a college’s reputation should help shape the trajectory of its ranking. But the exact reverse is true; the ranking shapes the trajectory of a college’s reputation, to the point where rankings and reputation are no longer independent concepts. To put it simply, the ranking of a college has become its reputation among other college leaders, the same leaders who bemoan the influence of college rankings.

We found a similar result when we looked at the global rankings of universities published by Times Higher Education, a magazine from the Times of London. Before the rankings were published, if you asked a higher education expert to compare the quality of the University of São Paulo to the National University of Singapore, few if any could tell you anything of interest. It was nearly impossible: these are universities in two completely different national systems, with different goals and objectives, which do not seek to compete with each other. It would be hard to find someone who had even set foot on both campuses.

Undoubtedly, a new set of international rankings would fill the information vacuum. So when the first world rankings were published by the Times, the results were widely read around the world, particularly among government leaders interested in how their systems compared. Suddenly they had an answer, neatly contained in a single table of the top 200 universities in the world. Unsurprisingly, the United States did very well on the list, taking the majority of places in the top 10.

Nick Bowman and I were very interested to see what effect this list would have on subsequent surveys of university reputation. In the 1970s, psychologists Amos Tversky and Daniel Kahneman did groundbreaking work (that ultimately won the Nobel Prize) on a concept they labeled the “anchoring-and-adjustment heuristic.” Tversky and Kahneman conducted an experiment. They took a wheel with a random set of numbers and spun it in front of subjects one at a time. After a number came up, they asked the subject a simple question: How many countries are in Africa?

Then they looked at the data and found an amazing result: the number of African countries named by their subjects was much closer to the random number spun on the wheel than would be expected by chance. They showed that even an entirely arbitrary number could have a significant influence on later numerical judgments made by people about completely different things. The arbitrary number was an anchor, and people adjusted their thinking accordingly. Nick and I thought we would see the same principle operating in our data.

Just as we did with U.S. News, we took the Times rankings data for the top 200 universities and tracked the results over time. In the first ranking, there were often large differences between a university’s reputation score and the ranking it received from Times Higher Education. In each subsequent year, the difference between one year’s ranking and the next year’s reputation score became smaller and smaller. Suddenly there was a clear consensus on a university’s reputation, be it São Paulo or Singapore. Where once there was ambiguity, now there was certainty.
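
The year-over-year comparison described above can be sketched in a few lines. This is a hedged illustration only, not the analysis we actually ran: it assumes that both the published ranking and the reputation survey have been converted to rank positions (1 is best), and the function names and data layout are hypothetical.

```python
def mean_abs_gap(ranks, reputation_ranks):
    """Average absolute gap between published rank and reputation rank.

    Both arguments map a university name to a rank position (1 is best).
    Only universities present in both mappings are compared.
    """
    common = set(ranks) & set(reputation_ranks)
    if not common:
        raise ValueError("no universities in common")
    return sum(abs(ranks[u] - reputation_ranks[u]) for u in common) / len(common)


def lagged_gaps(rankings_by_year, reputation_by_year):
    """Compare each year's ranking with the following year's reputation.

    Returns a dict mapping year -> mean absolute gap. A sequence of gaps
    that shrinks over time is what anchoring on the ranking would predict.
    """
    gaps = {}
    for year in sorted(rankings_by_year):
        next_year = year + 1
        if next_year in reputation_by_year:
            gaps[year] = mean_abs_gap(
                rankings_by_year[year], reputation_by_year[next_year]
            )
    return gaps
```

A shrinking gap alone does not prove anchoring, which is why a real analysis would also control for changes in the underlying indicators.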

But this doesn’t yet answer the question: How much do rankings matter? There are many problems with rankings, the reputation problems we identified being high among them, but that doesn’t mean they don’t have significant effects on college administrators, alumni, students, parents, and policymakers (in short, everyone who has influence over the future of a college, Oberlin included).

It turns out that the influence on students is fairly weak. Every year, first-year college students are surveyed about their attitudes and opinions by the Higher Education Research Institute at the University of California at Los Angeles (UCLA). In 2006, the researchers found that only 16 percent of students reported that rankings had had a strong impact on their decision. Nearly half of all students said that rankings were not important to them at all.

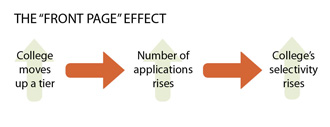

But these are only self-reports about students’ beliefs. What about their actual decisions? Nick and I decided to study this too. Looking at all the admissions data for national research universities from 1998 to 2005, we found that there was a substantial effect for colleges when they moved from one tier to another, either up or down. When they went up a tier, the number of applications went up, and with it, the college’s selectivity. (We called this “the front page effect.”) But going up just a few places in the numerical ranking had only a very small effect for national research universities.

And we found no admissions effect for liberal arts colleges at all. In fact, the strongest influence we found on admissions to liberal arts colleges was tuition price, and the effect was positive! That is, the higher the increase in tuition, the better the admissions outcomes looked the following year, part of a phenomenon often dubbed “the Chivas Regal effect.”

If the effects on students are weak, what about other entities that provide funds to higher education, like foundations, industry, states, and federal government research programs? It turns out the effects here are also fairly minimal, and only apply to certain providers. When the funder is from within higher education, we see that increases in ranking do have a positive effect. For example, a college’s alumni seem more likely to donate, and out-of-state students are more likely to enroll and pay higher tuition. The university faculty making decisions on federal research applications seem to be similarly affected.

But when the funder is from outside of higher education, such as private foundations, industry, or state governments, these funders seem relatively impervious to changes in a college’s ranking. Certainly foundations and industry, much like students and alumni, appear inclined to fund the more prestigious over the less prestigious. But they don’t track changes in ranking from year to year like those in higher education do.

This result is fairly ironic. College rankings are designed primarily to provide information about colleges to people outside the higher education system. Yet the only people strongly influenced by them are those within the higher education system: college presidents, students, faculty, and the college’s own alumni.

Unfortunately, this is not the message that has been received by college administrators around the country. For many college leaders, magazine rankings are a major form of accountability. When the rankings go up, there is ample opportunity for celebration, press releases, and kudos. When the rankings go down, there are recriminations and demands for changes that will improve colleges on exactly the kind of indicators that U.S. News chooses to measure.

The result is that rankings often become a self-fulfilling prophecy. As sociologists Wendy Espeland and Michael Sauder have argued, based on interviews with more than 160 business and law school administrators, people naturally change their behavior in response to being measured and held accountable by others. In addition, they tend to be disciplined by these measures, in that they take on the values of those who are measuring them. It doesn’t matter if the effects of rankings are real, because self-fulfilling prophecies are the result of a false belief made true by subsequent actions. In our case, we were able to demonstrate that this belief influenced administrators’ beliefs about college reputation, which gave the rankings even more power than they had originally.

The dark side of these self-fulfilling prophecies is the strong incentive to manipulate the data provided to calculate rankings. As sociologists Kim Elsbach and Roderick Kramer found with business schools, rankings that are inconsistent with a college’s self-perception often lead to conflicts over mission, goals, and identity. This can lead colleges to try to bring the external ranking back in line with their self-perception by any means necessary.

In recent years, we have seen numerous examples of rankings manipulation in the press. Catherine Watt, a former administrator at Clemson University, revealed at a conference that senior officials there sought to engineer each statistic used by U.S. News to rate the university, pouring substantial funds into the effort. She also revealed that senior leaders manipulated their U.S. News reputation surveys to improve Clemson’s position. Inside Higher Ed, an online magazine for college leaders, later confirmed that Clemson president James F. Barker had rated Clemson (and only Clemson) in the highest category, and rated all other colleges in lower categories.

The Gainesville Sun subsequently used a Freedom of Information Act request to discover the ratings provided by public college presidents in Florida. These papers showed that University of Florida president Bernard Machen had rated his university equal to Harvard and Princeton, and rated all other Florida public colleges in the second-lowest category. It is unclear how systematic this manipulation is, because U.S. News refuses to release even redacted copies of the surveys, and will not say how many surveys are not counted due to evidence of tampering.

We have seen that rankings will matter for as long as college administrators continue to believe they matter. Where does that leave the future of college rankings? Certainly it would be foolish to imagine they will disappear anytime soon. In fact, evidence shows that rankings have become steadily more powerful over time among selective colleges. And within law and business schools, the influence of rankings borders on mania.

One positive development is the proliferation of rankings from a variety of sources. Washington Monthly tries to rate colleges on their values, by looking at the percentage of students who qualify for Pell Grants and join the Peace Corps. Yahoo! has rated colleges based on the quality and pervasiveness of their technology. The Advocate ranks schools on their quality of life for LGBT students. Times Higher Education’s World University Rankings competes in a global market of rankings with Jiao Tong University in Shanghai and a new effort coordinated by the German government. Ultimately, this may have the effect of both increasing the match between a student’s needs and the ranking they seek out for information, and improving the quality of rankings information as each ranking competes for dominance.

Even U.S. News increasingly differentiates its rankings. We focused on the national research universities and liberal arts colleges, but they provide rankings in all kinds of categories, so that one can be the top “master’s comprehensive university in the Southeast” or the top “public liberal arts college in the West.” This differentiation could allow colleges to choose where they compete and on what basis, providing significantly more flexibility in their decisions.

But I hope that solid evidence, provided by my own research as well as by many others, will ultimately convince administrators, trustees, and alumni to turn down the volume on college rankings. Rankings are simply pieces of information provided by sources with particular goals and agendas. Everyone acknowledges that they are flawed. It is important for colleges to keep rankings in perspective and not lose sight of their most important values.

Ultimately, rankings don’t measure the things that really matter in a college experience. Rankings will never tell us how high the quality of Oberlin’s teaching is, how committed Oberlin’s professors are to undergraduate education, or how much value Oberlin students will gain from their college experience. Rankings will never help us understand how Oberlin graduates use their education to influence public policy or change the world through their entrepreneurial endeavors. And there is nothing in the current research to suggest that Oberlin would benefit significantly from changes whose only effect would be to improve its ranking.

This is the lesson for Oberlin. Oberlin could improve its ranking by cutting its enrollment, manipulating its admissions statistics, and sending glossy propaganda nationwide to those who complete the reputation surveys. Or Oberlin could continue to focus on the things that are really important, like improving graduation and retention rates, engaging alumni, and focusing resources on the student experience. This would improve rankings too, of course, but only as a side benefit to reaching Oberlin’s true potential.

Michael Bastedo ’94 is an associate professor at the Center for the Study of Higher and Postsecondary Education at the University of Michigan. His research is available at www.umich.edu/~bastedo.