AUSTIN, Texas - People with aphasia, a brain disorder affecting about 1 million people in the U.S., struggle to turn their thoughts into words and to comprehend spoken language.

A pair of researchers at The University of Texas at Austin has demonstrated an AI-based tool that can translate a person’s thoughts into continuous text, without requiring the person to comprehend spoken words. And the process of training the tool on a person’s own unique patterns of brain activity takes only about an hour. This builds on the team’s earlier work creating a brain decoder that required many hours of training on a person’s brain activity as the person listened to audio stories. This latest advance suggests it may be possible, with further refinement, for brain-computer interfaces to improve communication in people with aphasia.

“Being able to access semantic representations using both language and vision opens new doors for neurotechnology, especially for people who struggle to produce and comprehend language,” said Jerry Tang, a postdoctoral researcher at UT in the lab of Alex Huth and first author on a paper describing the work in Current Biology. “It gives us a way to create language-based brain-computer interfaces without requiring any amount of language comprehension.”

In earlier work, the team trained a system, including a transformer model like the kind used by ChatGPT, to translate a person’s brain activity into continuous text. The resulting semantic decoder can produce text whether a person is listening to an audio story, thinking about telling a story, or watching a silent video that tells a story. But there are limitations. To train this brain decoder, participants had to lie motionless in an fMRI scanner for about 16 hours while listening to podcasts, an impractical process for most people and potentially impossible for someone with deficits in comprehending spoken language. And the original brain decoder works only on the people for whom it was trained.

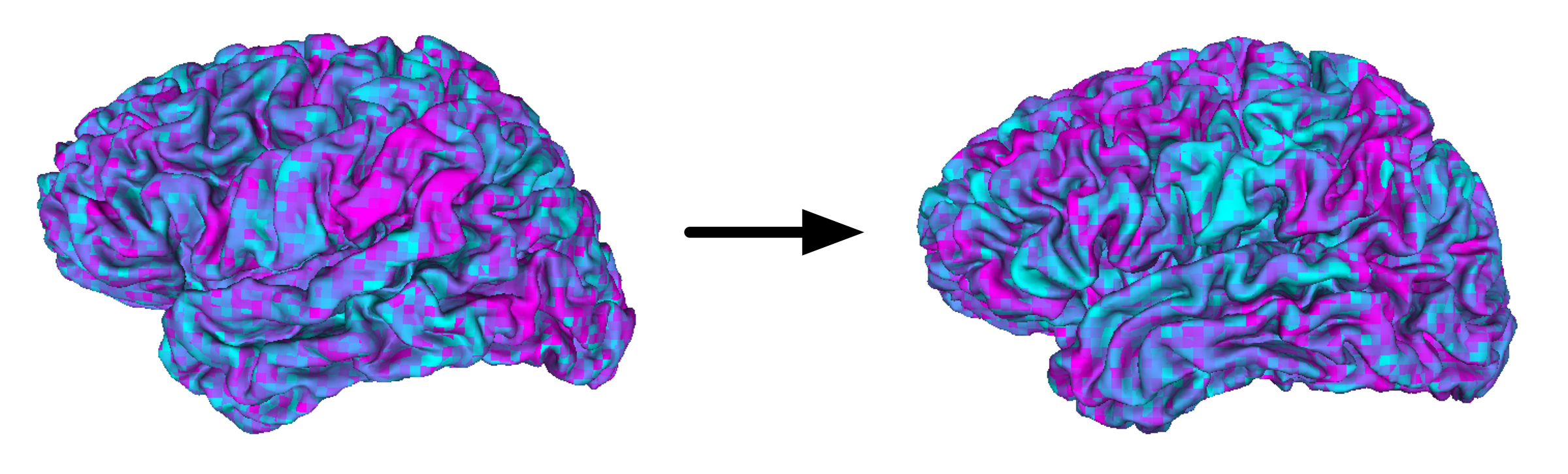

With this latest work, the team has developed a method to adapt the existing brain decoder, trained the hard way, to a new person with only an hour of training in an fMRI scanner while the person watches short, silent videos, such as Pixar shorts. The researchers developed a converter algorithm that learns how to map the brain activity of a new person onto the brain of someone whose activity was previously used to train the brain decoder, leading to similar decoding in a fraction of the time with the new person.
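As a rough illustration of what such a converter could look like, the sketch below fits a linear map from a new person’s brain responses to a reference person’s responses, using recordings of both people watching the same videos. The article does not say which technique the researchers used, so the choice of ridge regression, the function names `fit_converter` and `convert`, the `alpha` parameter and the synthetic data are all assumptions made for illustration only.

```python
import numpy as np


def fit_converter(new_subject, reference, alpha=1.0):
    """Fit a ridge-regression map from new-subject to reference responses.

    Both arrays have shape (timepoints, voxels) and are assumed to be
    time-aligned recordings of the same stimuli. `alpha` is a hypothetical
    regularization strength, not a value taken from the study.
    """
    n_features = new_subject.shape[1]
    gram = new_subject.T @ new_subject + alpha * np.eye(n_features)
    # Closed-form ridge solution: (X^T X + alpha * I)^-1 X^T Y
    return np.linalg.solve(gram, new_subject.T @ reference)


def convert(new_subject, weights):
    """Project new-subject responses into the reference subject's space."""
    return new_subject @ weights


# Synthetic stand-in data; real fMRI responses would replace these arrays.
rng = np.random.default_rng(0)
true_map = rng.normal(size=(20, 30))
train_new = rng.normal(size=(200, 20))
train_ref = train_new @ true_map + 0.1 * rng.normal(size=(200, 30))

weights = fit_converter(train_new, train_ref, alpha=1.0)

# Held-out responses from the new subject, converted to the reference space.
test_new = rng.normal(size=(50, 20))
test_ref = test_new @ true_map
predicted = convert(test_new, weights)
score = np.corrcoef(predicted.ravel(), test_ref.ravel())[0, 1]
```

In a setup like this, the converted responses would then be passed to the decoder already trained on the reference person, so the new person would need only the short session used to fit the map.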

Huth said this work reveals something profound about how our brains work: Our thoughts transcend language.

“This points to a deep overlap between what things happen in the brain when you listen to somebody tell you a story, and what things happen in the brain when you watch a video that’s telling a story,” said Huth, associate professor of computer science and neuroscience and senior author. “Our brain treats both kinds of story as the same. It also tells us that what we’re decoding isn’t actually language. It’s representations of something above the level of language that aren’t tied to the modality of the input.”

The researchers noted that, just as with their original brain decoder, their improved system works only with cooperative participants who take part willingly in training. If participants on whom the decoder has been trained later put up resistance, for example by thinking other thoughts, the results are unusable. This reduces the potential for misuse.

While their latest test subjects were neurologically healthy, the researchers also ran analyses to mimic the patterns of brain lesions in people with aphasia and showed that the decoder was still able to translate the story the subjects were perceiving into continuous text. This suggests that the approach could eventually work for people with aphasia.

They are now working with Maya Henry, an associate professor in UT’s Dell Medical School and Moody College of Communication who studies aphasia, to test whether their improved brain decoder works for people with aphasia.

“It’s been fun and rewarding to think about how to create the most useful interface and make the model-training procedure as easy as possible for the participants,” Tang said. “I’m really excited to continue exploring how our decoder can be used to help people.”

This work was supported by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health, the Whitehall Foundation, the Alfred P. Sloan Foundation and the Burroughs Wellcome Fund.