AUSTIN, Texas — Utilizing generative synthetic intelligence, a staff of researchers at The College of Texas at Austin has transformed sounds from audio recordings into street-view photographs. The visible accuracy of those generated photographs demonstrates that machines can replicate human connection between audio and visible notion of environments.

In a paper revealed in Computer systems, Atmosphere and City Methods, the analysis staff describes coaching a soundscape-to-image AI mannequin utilizing audio and visible information gathered from a wide range of city and rural streetscapes after which utilizing that mannequin to generate photographs from audio recordings.

“Our examine discovered that acoustic environments include sufficient visible cues to generate extremely recognizable streetscape photographs that precisely depict completely different locations,” mentioned Yuhao Kang, assistant professor of geography and the surroundings at UT and co-author of the examine. “This implies we will convert the acoustic environments into vivid visible representations, successfully translating sounds into sights.”

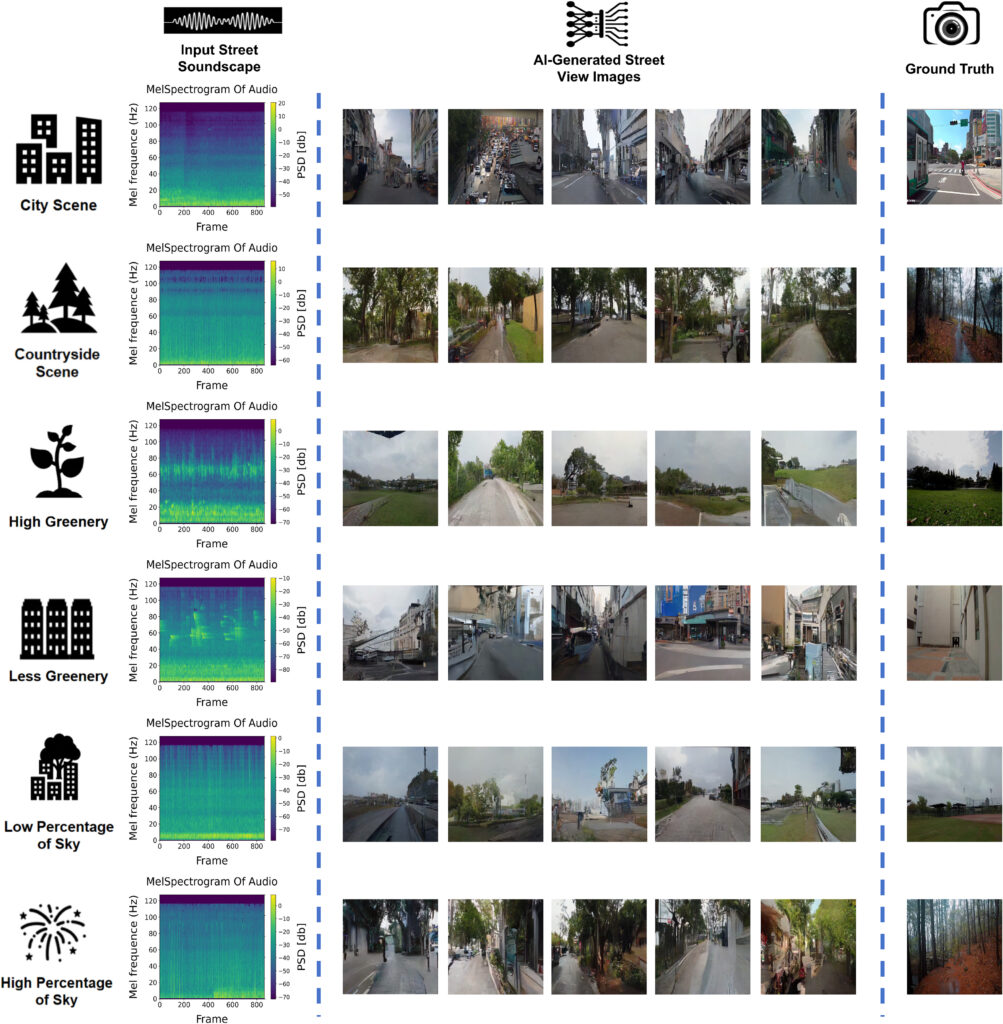

Utilizing YouTube video and audio from cities in North America, Asia and Europe, the staff created pairs of 10-second audio clips and picture stills from the assorted areas and used them to coach an AI mannequin that would produce high-resolution photographs from audio enter. They then in contrast AI sound-to-image creations produced from 100 audio clips to their respective real-world photographs, utilizing each human and laptop evaluations. Pc evaluations in contrast the relative proportions of greenery, constructing and sky between supply and generated photographs, whereas human judges had been requested to appropriately match one among three generated photographs to an audio pattern.

The outcomes confirmed sturdy correlations within the proportions of sky and greenery between generated and real-world photographs and a barely lesser correlation in constructing proportions. And human individuals averaged 80% accuracy in choosing the generated photographs that corresponded to supply audio samples.

“Historically, the power to check a scene from sounds is a uniquely human functionality, reflecting our deep sensory reference to the surroundings. Our use of superior AI strategies supported by massive language fashions (LLMs) demonstrates that machines have the potential to approximate this human sensory expertise,” Kang mentioned. “This means that AI can lengthen past mere recognition of bodily environment to doubtlessly enrich our understanding of human subjective experiences at completely different locations.”

Along with approximating the proportions of sky, greenery and buildings, the generated photographs usually maintained the architectural kinds and distances between objects of their real-world picture counterparts, in addition to precisely reflecting whether or not soundscapes had been recorded throughout sunny, cloudy or nighttime lighting circumstances. The authors notice that lighting info may come from variations in exercise within the soundscapes. For instance, visitors sounds or the chirping of nocturnal bugs may reveal time of day. Such observations additional the understanding of how multisensory components contribute to our expertise of a spot.

“If you shut your eyes and hear, the sounds round you paint footage in your thoughts,” Kang mentioned. “For example, the distant hum of visitors turns into a bustling cityscape, whereas the mild rustle of leaves ushers you right into a serene forest. Every sound weaves a vivid tapestry of scenes, as if by magic, within the theater of your creativeness.”

Kang’s work focuses on utilizing geospatial AI to review the interplay of people with their environments. In one other latest paper revealed in Nature, he and his co-authors examined the potential of AI to seize the traits that give cities their distinctive identities.